Some background…

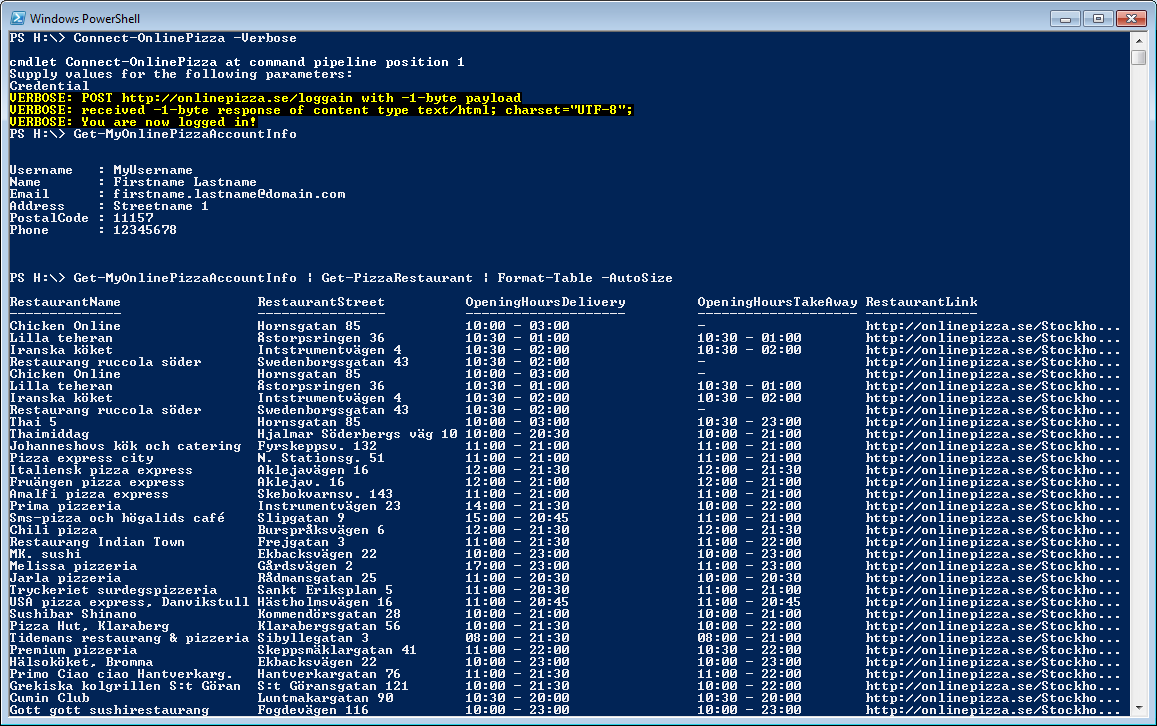

We’ve been buying our groceries online for a few years now. We find it super convenient and it saves us a lot of time. I even created a PowerShell module for it some time ago!

There is one (very minor) annoyance with it though: remembering to use the discount coupons you get after you’ve bought groceries for a certain amount. These coupon codes get sent out before your current order has been delivered, which means you can’t add them to your next order right away (you can’t reach checkout while you have an active order waiting for delivery).

This means I have to wait for my order to be delivered and then add the code at the checkout step of my next order, at which point I’ve forgotten all about it and maybe even deleted or archived the e-mail containing the PDF file with the coupon.

I thought of this as the perfect scenario to check out a (relatively) new service from Microsoft called Flow. The idea behind Flow is to make it simple to automate things without the need to write any code, but that doesn’t mean you can’t do that as well 🙂

How to achieve this?

When building automation I usually try to write down the steps needed to achieve the “end-to-end automation”. In this case that would be:

- Make sure the e-mails containing the coupons can be found automatically

- Get the coupon from the e-mail moved somewhere where it can be accessed by a PowerShell runbook in Azure Automation

- Create a PowerShell function that can parse pdf-files so the code inside can be retrieved

- Create another PowerShell function that can post the code to the online grocery store

- Profit! 🙂

These steps have now been achieved, and here’s how I did it:

Fetching the e-mail and the attachments (steps 1 and 2)

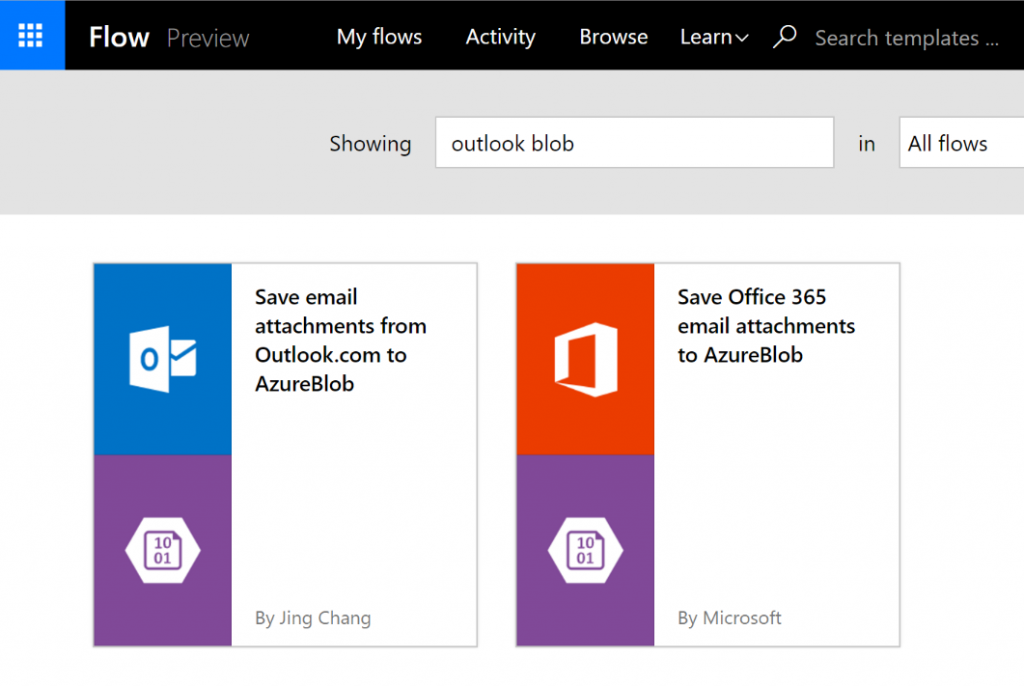

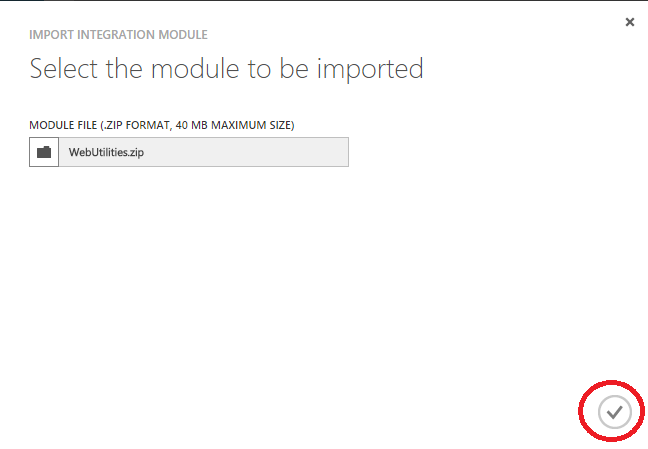

This is amazingly simple using Microsoft Flow. After you’ve signed up and logged in, just go to “My Flows” and click “Create from template”. There are quite a few templates to pick from, so the easiest way to find a suitable one is to use the search function at the top of the page. Since I’m using Outlook.com as my personal e-mail provider and thought the simplest way to store the attachments was blob storage, I searched for “outlook blob” and found these templates I could use:

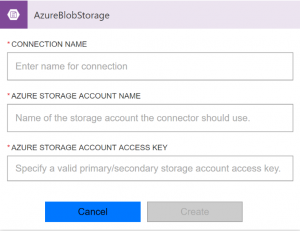

In my case, the first one fits perfectly so that’s the one I chose as a starting point. Click on it, pick “choose this template” and first connect your Azure storage account (needs to be created in advance):

Then connect your e-mail account by logging in:

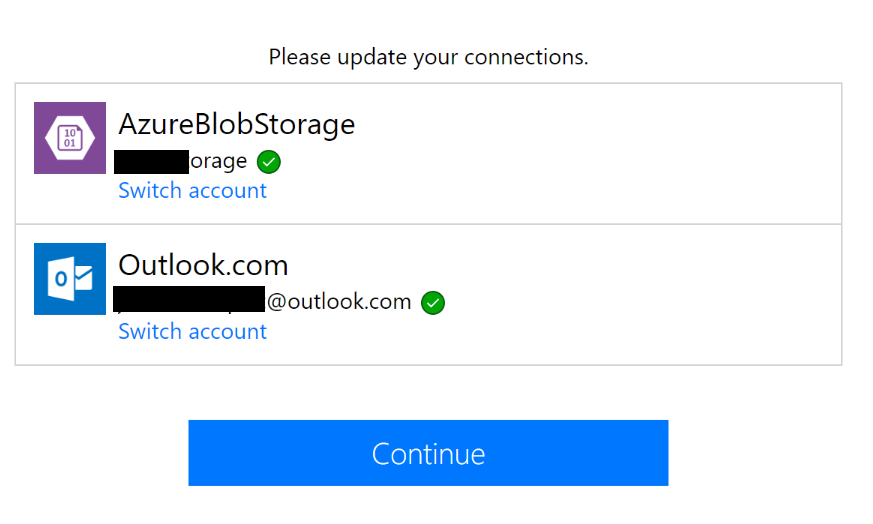

If everything worked, you can go on and press “Continue”.

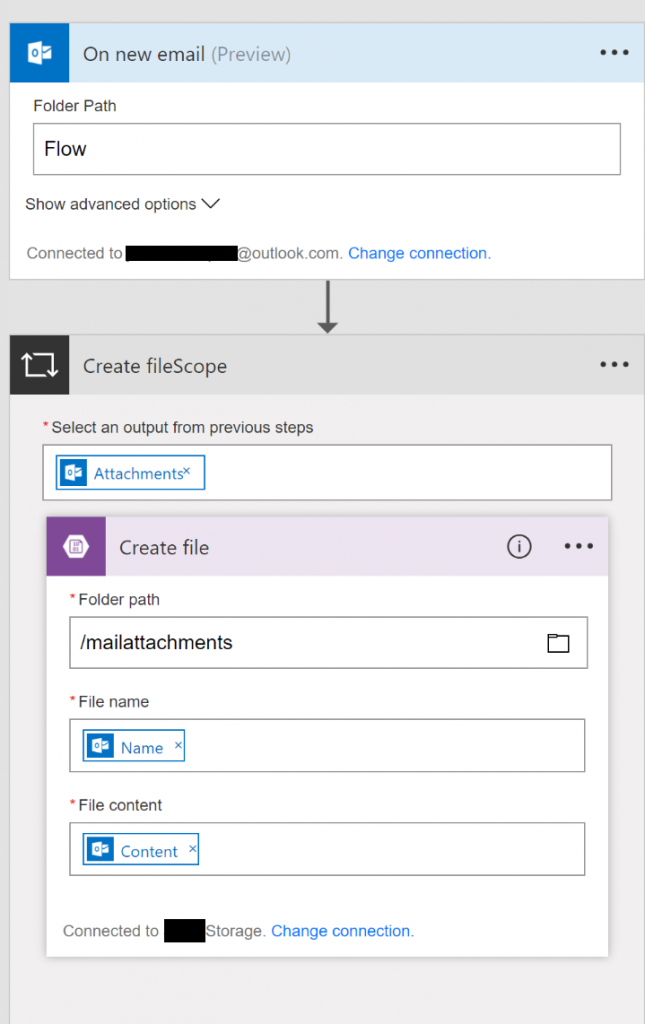

You’ll then arrive at the page where you can configure the different steps in your flow, and if you want to, add some conditions. After you’ve clicked “edit” on both steps and updated them they should look something like this:

As you can see, I changed the folder this flow should look in from “Inbox” to “Flow” to prevent it from harvesting all the attachments I receive. I can then simply add a mail rule to put the e-mails I want in that folder.

The same goes for the “Create file” step: “mailattachments” should correspond to a container in your storage account.

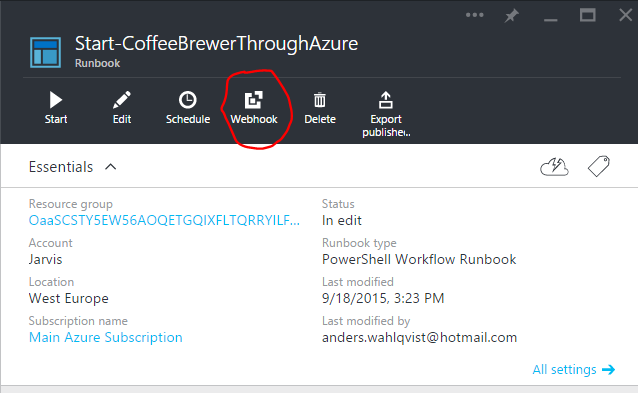

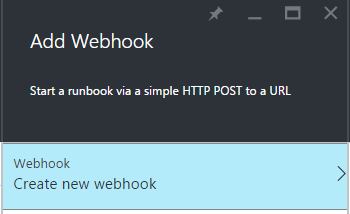

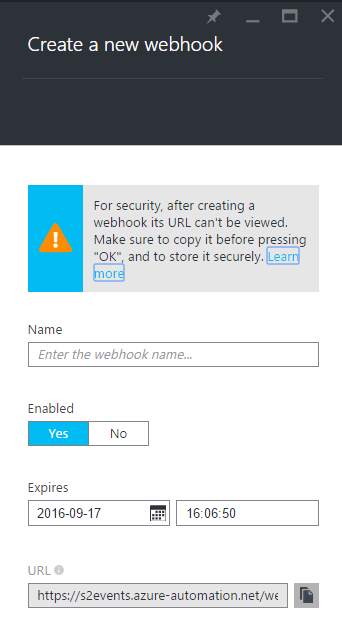

That’s it for parsing the e-mails. If you would like to, you could also add an HTTP request after these steps to trigger the runbook automatically (through a webhook) as soon as a new attachment has been saved to the blob storage, but in this case I’ll just schedule it to run at a regular interval.
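
If you do go the webhook route, the runbook has to read whatever Flow posted. Azure Automation hands a webhook-started runbook a `$WebhookData` object whose `RequestBody` property holds the raw request body. Here is a minimal sketch of how that could be unpacked, assuming the Flow HTTP action posts a small JSON document with a `blobName` property. That property name and the function name are my own hypothetical convention, not something the template produces.

```powershell
# Sketch only: 'blobName' is an assumed property in the JSON body that the
# Flow HTTP action would post. It is not defined by Flow or Azure Automation.
function Get-BlobNameFromWebhookData {
    [CmdletBinding()]
    [OutputType([string])]
    param (
        # The object Azure Automation passes to a webhook-started runbook
        [Parameter(Mandatory = $true)]
        [object]
        $WebhookData
    )

    if ([string]::IsNullOrWhiteSpace($WebhookData.RequestBody)) {
        throw 'The webhook request had no body.'
    }

    # The body arrives as a plain string, so convert it from JSON first
    $Body = $WebhookData.RequestBody | ConvertFrom-Json

    if (-not $Body.blobName) {
        throw "The webhook body did not contain a 'blobName' property."
    }

    return [string]$Body.blobName
}

# Try it locally with a fake payload (no Azure needed)
$FakeWebhookData = [pscustomobject]@{
    RequestBody = '{ "blobName": "bonus-example.pdf" }'
}
Get-BlobNameFromWebhookData -WebhookData $FakeWebhookData
```

With something like this in place the runbook could process just the blob it was told about instead of listing the whole container.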

Parsing the PDF file and posting the discount code (steps 3, 4 and 5!)

To be able to get text out of the PDF file I used the iTextSharp library and wrapped it up in a PowerShell function, which in its simplest form might look something like this:

(Code example found at: https://powershell.org/forums/topic/convertfrom-pdf-powershell-cmdlet/)

    Add-Type -Path "$PSScriptRoot\itextsharp.dll"

    function Get-PdfText
    {
        [CmdletBinding()]
        [OutputType([string])]
        param (
            [Parameter(Mandatory = $true)]
            [string]
            $Path
        )

        $Path = $PSCmdlet.GetUnresolvedProviderPathFromPSPath($Path)

        try
        {
            $reader = New-Object iTextSharp.text.pdf.pdfreader -ArgumentList $Path
        }
        catch
        {
            throw
        }

        $stringBuilder = New-Object System.Text.StringBuilder

        for ($page = 1; $page -le $reader.NumberOfPages; $page++)
        {
            $text = [iTextSharp.text.pdf.parser.PdfTextExtractor]::GetTextFromPage($reader, $page)
            $null = $stringBuilder.AppendLine($text)
        }

        $reader.Close()

        return $stringBuilder.ToString()
    }

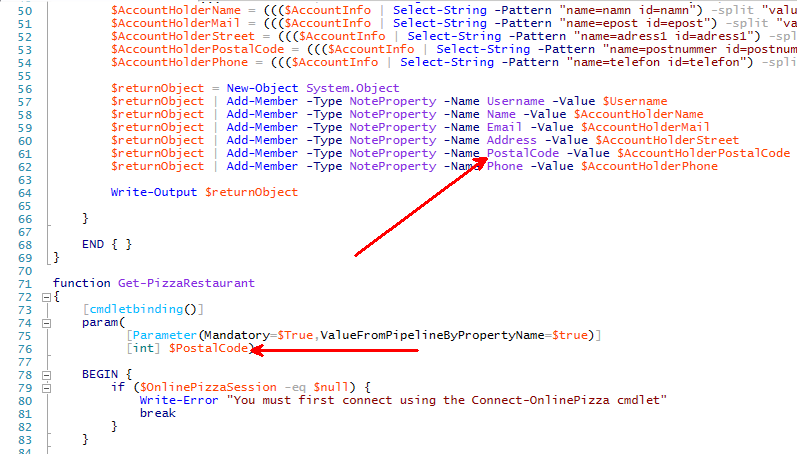

I’ve also added a function called “Add-MatHemBonusCode” to my “Grocery shopping PowerShell module”, because that has got to exist, right? 😉

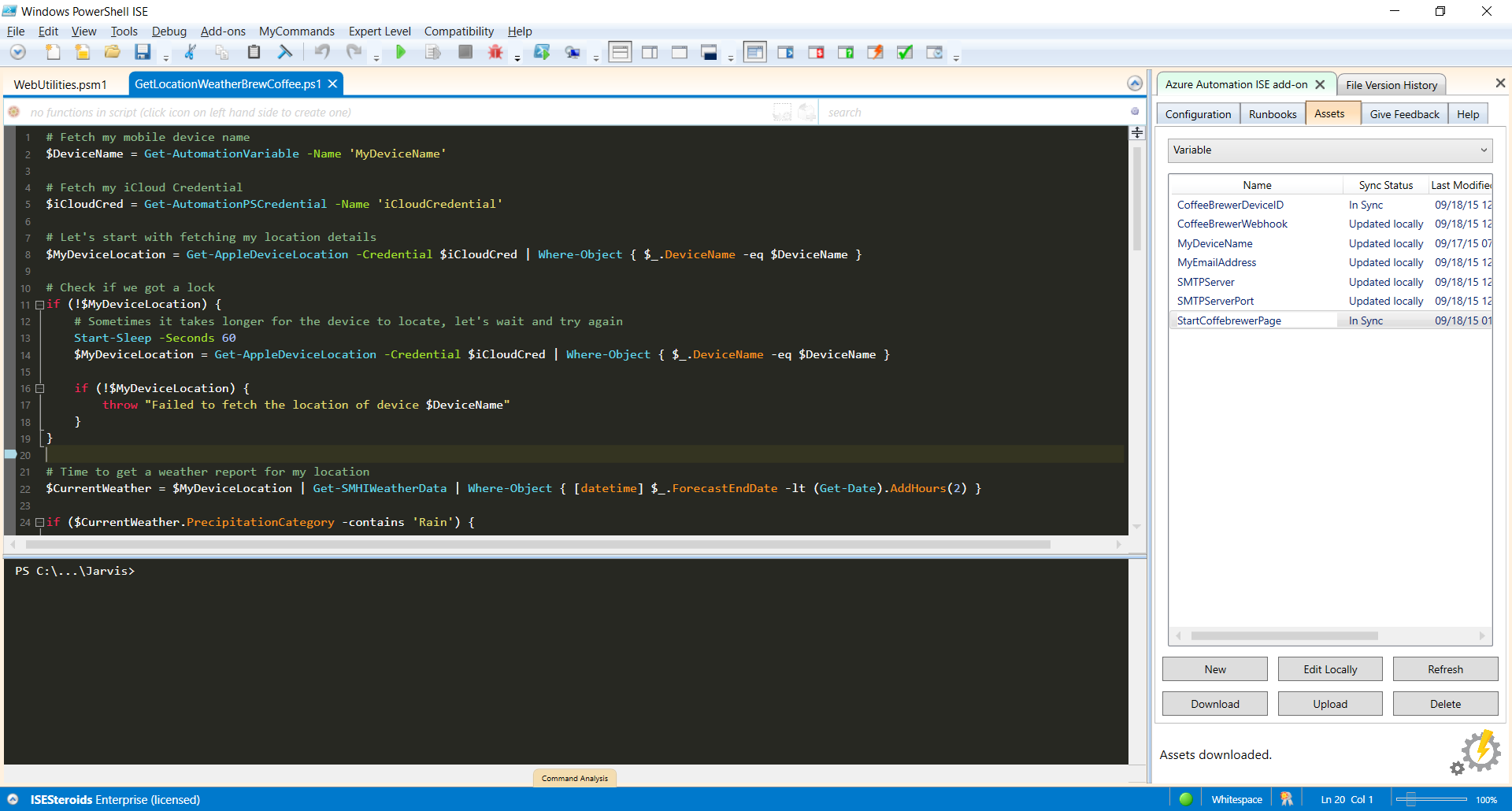

Finally, it’s time to wrap those functions up in a runbook.

The runbook could look something like this (don’t look at this as a runbook best practice template, it’s not 🙂 ):

    # Load the credentials needed
    $AzureCredential = Get-AutomationPSCredential -Name 'AzureCred'
    $MatHemCredential = Get-AutomationPSCredential -Name 'MatHem'

    # Log in to Azure
    Add-AzureRmAccount -Credential $AzureCredential

    # Set a few parameters and fetch the storage information
    $ResourceGroupName = 'MyResourceGroup'
    $StorageAccountName = 'MyStorageAccount'
    $ContainerName = 'mailattachments'

    $StorageAccountKey = Get-AzureRmStorageAccountKey -ResourceGroupName $ResourceGroupName -Name $StorageAccountName
    $StorageContext = New-AzureStorageContext -StorageAccountName $StorageAccountName -StorageAccountKey $StorageAccountKey[0].Value
    $Blobs = Get-AzureStorageBlob -Context $StorageContext -Container $ContainerName

    # Filter out the attachments needed for this specific flow. Only
    # needed if you run multiple flows that look at attachments in the
    # same container
    $TargetedBlobs = $Blobs | Where-Object { $_.Name -match '^kvitto|^bonus' }

    foreach ($MatHemBlob in $TargetedBlobs) {
        if ($MatHemBlob.Name -match '^kvitto') {
            # These are not needed so let's just remove them
            Remove-AzureStorageBlob -Blob $MatHemBlob.Name -Context $StorageContext -Container $ContainerName -Force
        }
        elseif ($MatHemBlob.Name -match '^bonus') {
            # These contain the actual bonus or discount codes, so let's download those
            $LocalFileName = [System.IO.Path]::GetTempFileName()
            Get-AzureStorageBlobContent -Blob $MatHemBlob.Name -Container $ContainerName -Context $StorageContext -Destination $LocalFileName -Force

            # Fetch the text from the file
            $BonusPdfText = (Get-PdfText -Path $LocalFileName) -split "`n"

            # Parse out the code itself
            $BonusCode = ((($BonusPdfText -match '^Värdekod:') -split 'Värdekod: ')[1]).trim()

            # Connect to the online grocery store and post the code (+ some error handling and notifications)
            if (-not $Global:MathemSession) {
                Connect-Mathem -Credential $MatHemCredential
            }

            if ($BonusCode) {
                try {
                    $Results = Add-MatHemBonusCode -BonusCode $BonusCode -ErrorAction Stop
                }
                catch {
                    if ($_.ToString() -like '*Bonuskoden har redan använts i en annan order*' ) {
                        Write-Warning "Bonus code $BonusCode has already been used. Cleaning up blob..."
                        Send-PushNotification -Message "Bonus code $BonusCode from $($MatHemBlob.Name) has already been used. I'm cleaning up the blob."
                        Remove-AzureStorageBlob -Blob $MatHemBlob.Name -Context $StorageContext -Container $ContainerName -Force
                        Continue
                    }
                    elseif ($_.ToString() -like '*Felaktig bonuskod*' ) {
                        Write-Warning "Bonus code $BonusCode is invalid. Notifying master..."
                        Send-PushNotification -Message "The bonus code $BonusCode from $($MatHemBlob.Name) was invalid. Please take care of this for me!"
                        Remove-AzureStorageBlob -Blob $MatHemBlob.Name -Context $StorageContext -Container $ContainerName -Force
                        Continue
                    }
                    else {
                        Write-Warning "Failed to add bonus code $BonusCode from $($MatHemBlob.Name). The error was $($_.ToString())"
                        Send-PushNotification -Message "Failed to add bonus code $BonusCode from $($MatHemBlob.Name). The error was: $($_.ToString())"
                        Continue
                    }
                }

                Send-PushNotification -Message "Bonus code $BonusCode from $($MatHemBlob.Name) has been added with the response: $Results"
            }
        }
    }
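
One thing to be aware of in the runbook above: if no line in the PDF text starts with “Värdekod:”, the chained `-split` and `[1]` lookup ends up calling `.trim()` on `$null`, which raises an error before the `if ($BonusCode)` check is reached. A slightly more forgiving way to pull out the code could look something like this. It is only a sketch: the function name `Get-BonusCodeFromText` is made up for this example, and it assumes the code is whatever follows the label on the same line.

```powershell
# Sketch: extract the value after a 'Värdekod:' label from extracted PDF text.
# Returns $null (instead of raising an error) when no such line exists.
function Get-BonusCodeFromText {
    [CmdletBinding()]
    [OutputType([string])]
    param (
        [Parameter(Mandatory = $true)]
        [AllowEmptyString()]
        [string]
        $Text
    )

    # (?i) ignores case, like -match does. (?m) lets ^ and $ match at every
    # line break, and \s*$ absorbs a trailing carriage return or spaces.
    $Pattern = '(?im)^Värdekod:\s*(?<Code>.+?)\s*$'
    $Match = [regex]::Match($Text, $Pattern)

    if ($Match.Success) {
        return $Match.Groups['Code'].Value
    }

    return $null
}

# Made-up sample text, a real coupon will look different
$SampleText = "Line one`r`nVärdekod: EXAMPLE-123`r`nLine three"
Get-BonusCodeFromText -Text $SampleText
```

Since this takes the whole text as one string, the result of `Get-PdfText` can be passed in directly without splitting it into lines first.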

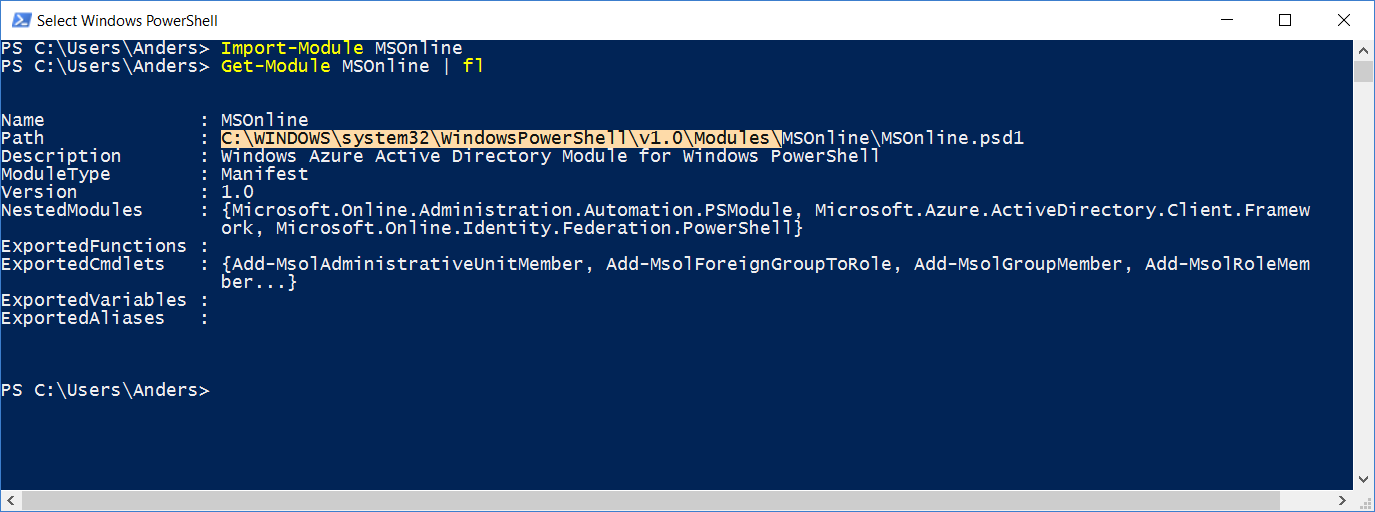

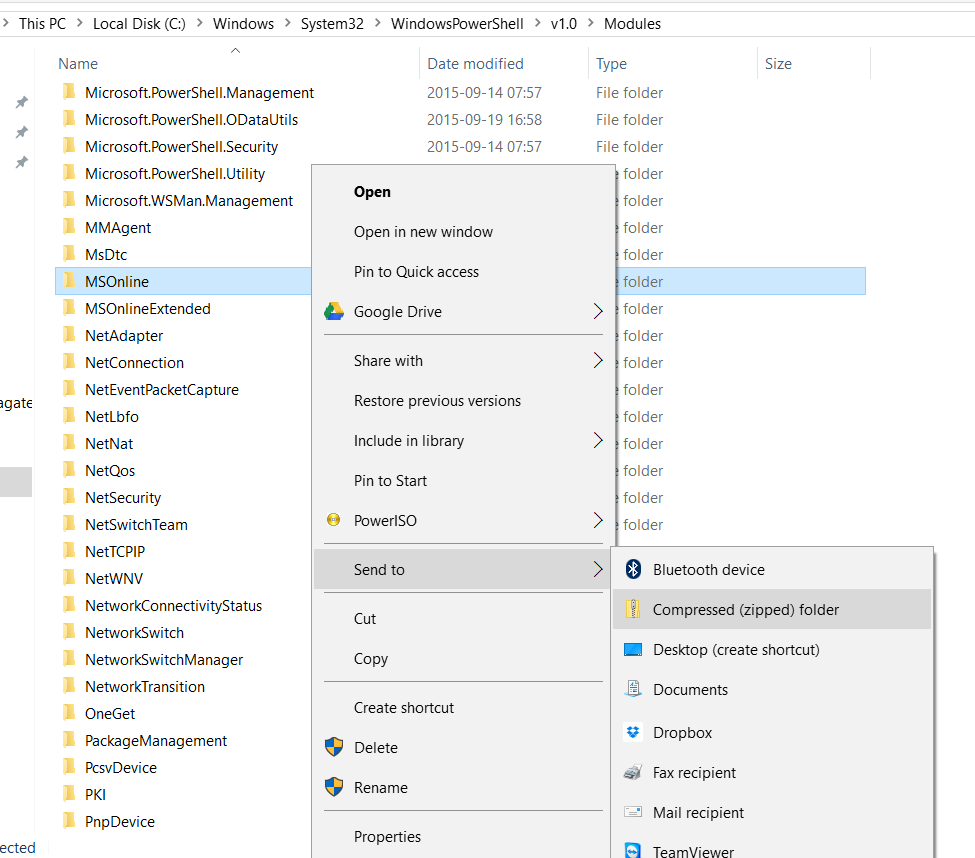

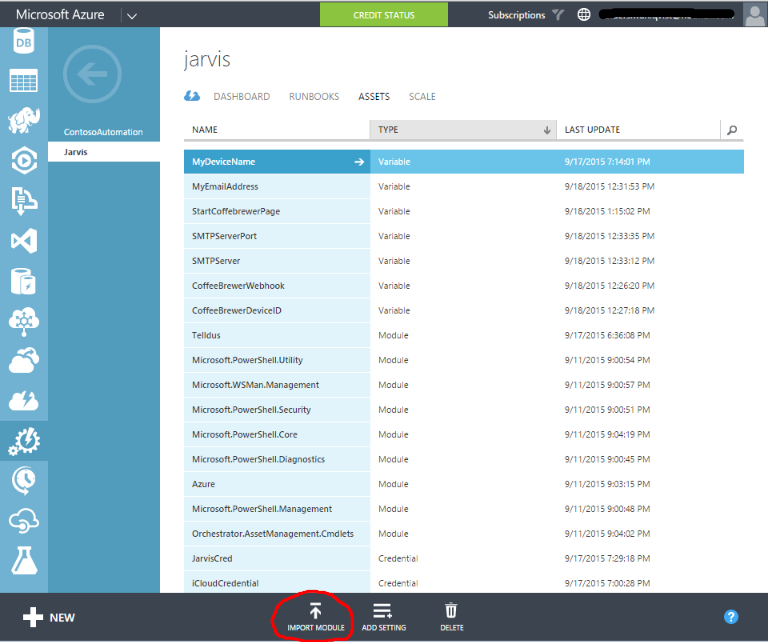

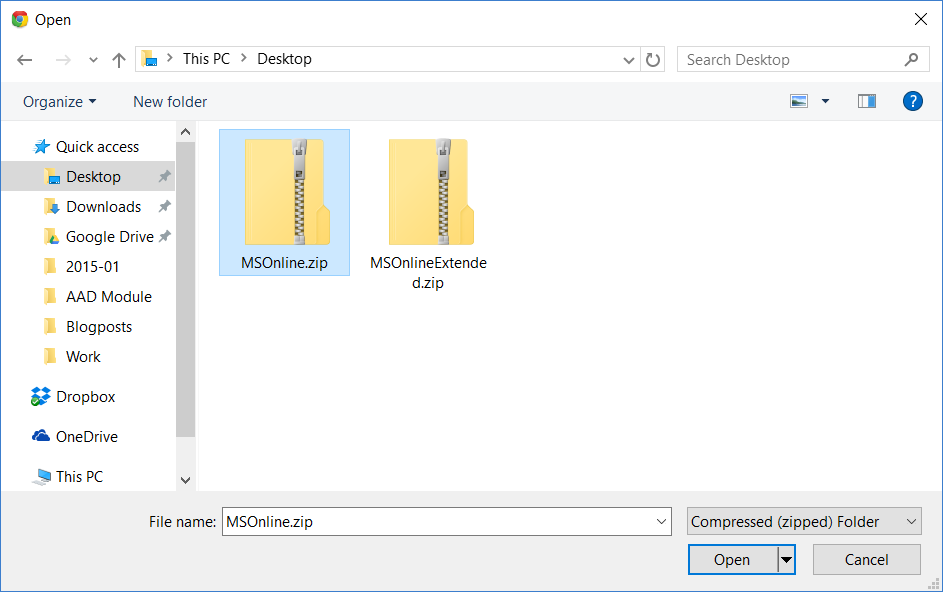

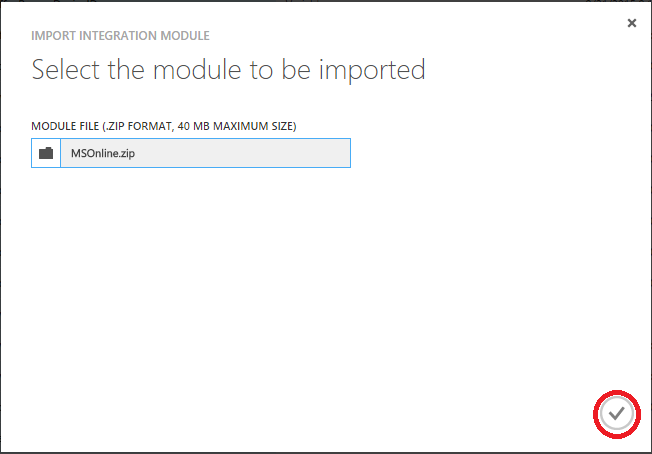

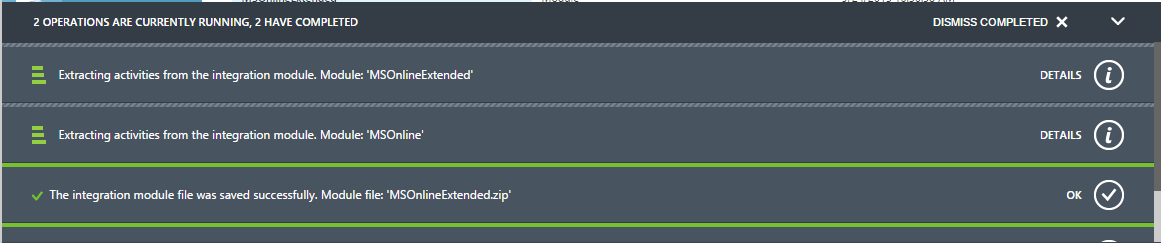

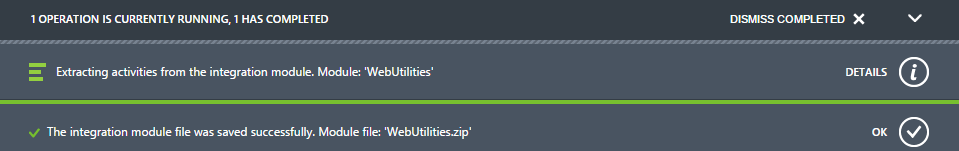

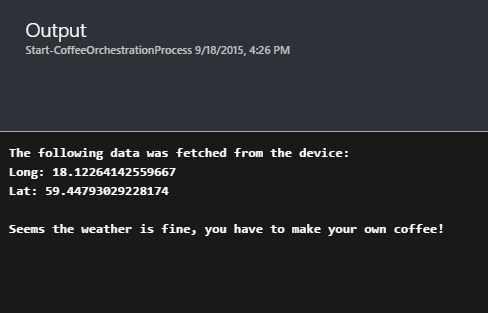

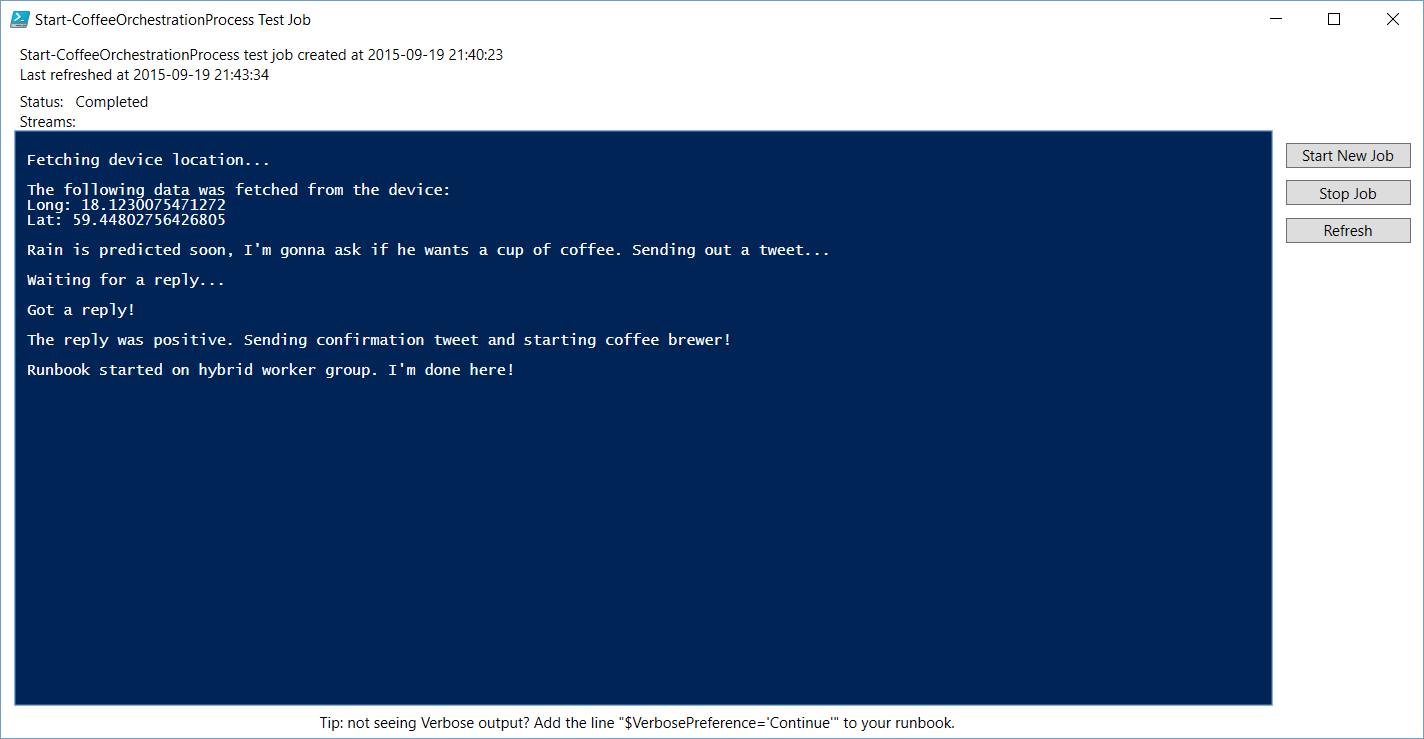

Time to schedule that in Azure Automation, and make sure all the modules needed are available for it when it runs! (I run this on a hybrid worker)

Conclusion

While I have had a few issues with Microsoft Flow along the way (it is still in preview after all), it seems like a really cool service. And since you can make an HTTP request to a webhook in Azure Automation, and/or just integrate them through some other service like the blob storage example in this post, the possibilities are pretty much endless.

So, as always… Keep automating anything!